written by Tobias Leemann

Having introduced the class of General Purpose AI (GPAI) models defined in the EU’s AI Act in my previous post, in this post we summarize the regulatory requirements for this class of models. Some of these requirements are still quite abstract and will be made more concrete in the GPAI Code of Practice, which is currently being finalized.

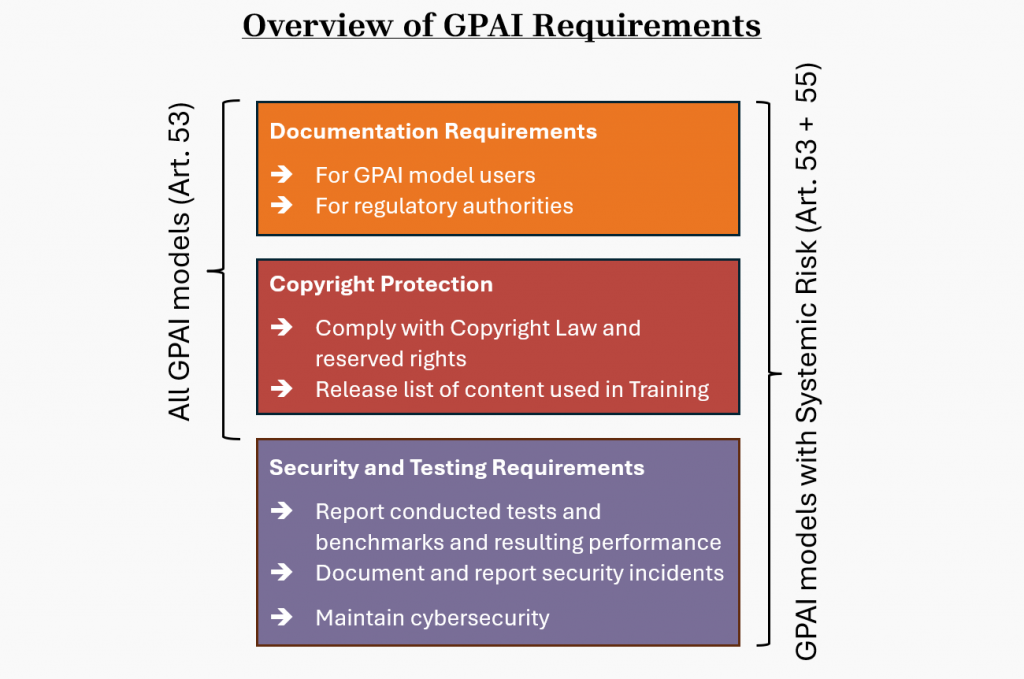

An overview of the GPAI requirements found in Articles 53 and 55 of the AI Act is visualized below:

Standard GPAI models

The following principles apply to all GPAI models (Art. 53):

Documentation. GPAI providers are required to keep technical documentation on the training and testing of their models. This information must be provided to the EU’s AI Office and national competent authorities upon request. Information about the capabilities, limitations and intended usage of the model must also be provided to downstream providers (customers who integrate these GPAI models into their own AI systems), so that they can assess the performance characteristics of the model at hand. Art. 53(2) relaxes these requirements for open-source GPAI models, although this exception does not apply to models with systemic risk. The complete list of required information can be found in Annexes XI and XII of the AI Act.

Copyright Protection. GPAI providers must put in place a policy to comply with EU copyright law, in particular to identify and respect reservations of rights expressed by rights holders (Art. 53(1)(c)). To increase transparency in this regard, GPAI providers must also publish a "sufficiently detailed summary" of the content used for training their models, as stated in Art. 53(1)(d).

How to prove compliance. Providers can demonstrate compliance by adhering to the Code of Practice, but they may also rely on alternative "adequate means" of compliance, which, however, entails a much higher legal risk.

GPAI with Systemic Risk

Additional requirements apply to models with systemic risk (Article 55). This category only includes the most powerful models of their time. Most notably, if a model is classified as posing systemic risk, its provider must perform additional evaluations with state-of-the-art benchmarks and security testing (e.g., adversarial and penetration testing) and report the results to the AI Office or national authorities. If the GPAI model consists of multiple interacting components, tools, etc., a description of the system architecture must also be provided where applicable. These measures can be seen as steps toward the provider's broader duty to assess and mitigate systemic risks. Providers must further ensure an adequate level of cybersecurity for the model and its physical infrastructure to prevent abuse.

Discussion

Most of the documentation requirements outlined in Annex XII are relatively standard and resemble what one might expect from a typical user manual, such as usage guidelines and the intended purposes of the models. However, the sections dealing with copyright and transparency around training data pose more significant challenges. Specifically, providers are required to provide information about the data used for training, testing and validation, where applicable, including the type and provenance of the data and the curation methodologies. To date, the data used to train state-of-the-art LLMs and how it is pre-processed are among the best-kept secrets in the industry. It remains to be seen how much detail the AI Office will ultimately demand, and whether this level of transparency could trigger a wave of copyright litigation (it is fairly clear that almost all state-of-the-art LLMs are trained on copyright-protected content). The transparency section of the draft Code of Practice suggests that only a rather coarse summary, without an exhaustive list of all individual data sources, will be required. For now, it therefore looks as if LLM providers will get off lightly once again.

For GPAI models with systemic risk, evaluation and security testing are no longer optional but mandatory. While most providers already perform these steps individually out of basic business necessity (no customer wants to work with an unsafe AI), they will probably need to follow a common standard from now on. Providers of these most powerful models are also required to report serious incidents during the deployment phase of the model. This requirement is new as well, and the notion of an "incident" will need to be specified further. Furthermore, it is not yet clear whether these incident reports will be made public. The Code of Practice will detail and specify the requirements of Article 53 (GPAI requirements) and Article 55 (GPAI with systemic risk); it will be discussed in a future post once its final version becomes publicly available.

Disclaimer: This post is a summary of notions from the AI Act. It is not exhaustive and does not constitute legal advice.